Load Balancing Microservices Architecture Performance


A microservices architecture structures a business application as a set of loosely coupled services. Organizations large and small are adopting it for advantages such as easier hyperscaling and continuous delivery of complex business applications.

In a series of surveys conducted by the IBM Market Development & Insights team, about 27% of users said they’re seeing improvements from microservices across many areas of their business, particularly in the greater flexibility to scale resources up or down.

However, a distributed architecture brings complexity of its own, compounded by rapidly fluctuating business needs, time-to-market pressure, and scaling requirements. That complexity is best managed with a load balancing microservices architecture.

What is Load Balancing?

Load balancing is the process of distributing incoming traffic across the servers in a server pool. Traffic can be split evenly or according to specific rules such as Round Robin (each server takes a turn in a fixed order) or Least Connections (the server with the fewest active connections receives the next request).
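The two rules named above can be illustrated with a minimal Python sketch. The class names, method names, and server names are hypothetical and exist only for illustration; a production load balancer would also deal with health checks, timeouts, and concurrency.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers):
        self._rotation = cycle(list(servers))

    def pick(self):
        return next(self._rotation)


class LeastConnectionsBalancer:
    """Picks the server that currently has the fewest in-flight requests."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Ties are broken by the order in which servers were listed.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when the request handled by `server` has finished.
        self.active[server] -= 1


pool = ["app-1", "app-2", "app-3"]  # hypothetical server names

rr = RoundRobinBalancer(pool)
rr_picks = [rr.pick() for _ in range(4)]  # wraps around after the third pick

lc = LeastConnectionsBalancer(pool)
first = lc.acquire()   # all idle, so the first server is chosen
second = lc.acquire()  # the first server is busy, so the next idle one is chosen
lc.release(first)      # the first request completes
third = lc.acquire()   # the first server is idle again
```

Round Robin ignores how busy each server is, whereas Least Connections adapts to requests that take unequal time, at the cost of tracking per-server state.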


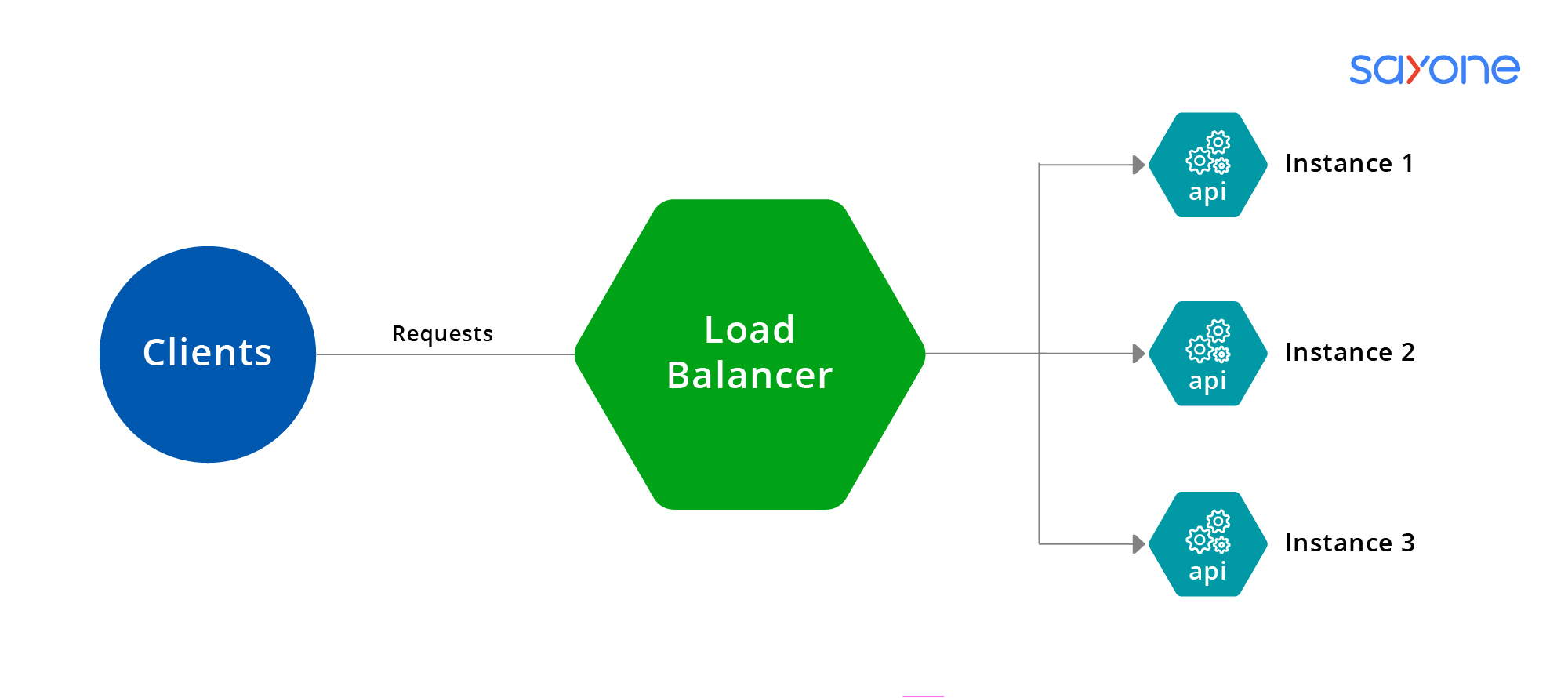

Two types of load balancing microservices architecture are used: server-side load balancing and client-side load balancing. Server-side load balancing is the classical approach, in which a load balancer placed in front of the servers distributes the traffic. The balancer routes requests to the servers equally or according to certain rules, and the servers then perform the work.

Common examples of server-side load balancers are NGINX and NetScaler.

Client-side Load Balancing

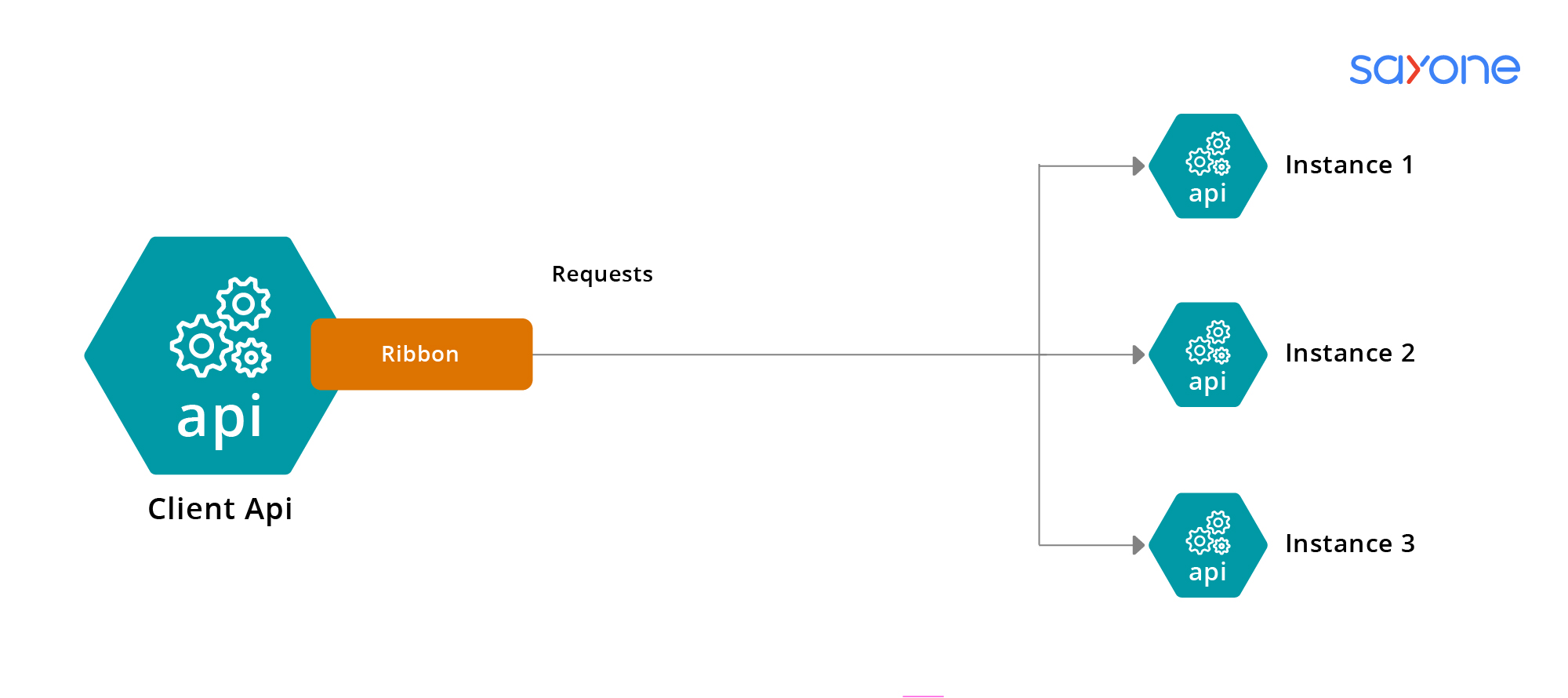

In client-side load balancing, the client handles the entire load balancing. The client API is expected to know the addresses of all available server API instances, which are either hard-coded or obtained from a service registry.

This method avoids the bottlenecks and single points of failure that a central load balancer can introduce. When using service discovery, the client does not need to know anything about the server API except its registered name; the service registry provides the rest of the information about the server API instances.

Advantages:

- Reduced Server Overhead: By giving the client more control over the load balancing logic, centralised load balancers—which can become bottlenecks in situations with high traffic—are spared the strain.

- Improved Scalability: Client-side load balancing allows clients to dynamically adjust to changes in the backend server pool without waiting for updates from a centralised load balancer, which improves system scalability.

- Enhanced Fault Tolerance: Clients can implement advanced failure detection and health checks, so they can promptly detect and steer clear of unhealthy or overloaded servers, which improves the system's overall fault tolerance.

- Enhanced Performance: By choosing backend servers based on proximity, latency, and other performance parameters, client-side load balancing can lower network latency and speed up response times, improving the application's overall performance.
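As a rough illustration of the client-side approach, the sketch below has the client look up instances by registered name and skip any it has marked unhealthy. `ServiceRegistry`, `ClientSideBalancer`, the service name, and the addresses are all hypothetical; real deployments would typically rely on an existing service discovery system rather than an in-memory dictionary.

```python
import random


class ServiceRegistry:
    """Hypothetical in-memory registry: service name -> instance addresses."""

    def __init__(self):
        self._instances = {}

    def register(self, name, address):
        self._instances.setdefault(name, []).append(address)

    def lookup(self, name):
        return list(self._instances.get(name, []))


class ClientSideBalancer:
    """Chooses an instance on the client, avoiding ones marked unhealthy."""

    def __init__(self, registry, rng=None):
        self.registry = registry
        self.unhealthy = set()
        self.rng = rng or random.Random()

    def mark_unhealthy(self, address):
        self.unhealthy.add(address)

    def mark_healthy(self, address):
        self.unhealthy.discard(address)

    def choose(self, service_name):
        candidates = [
            address
            for address in self.registry.lookup(service_name)
            if address not in self.unhealthy
        ]
        if not candidates:
            raise LookupError(f"no healthy instance of {service_name!r}")
        # Random choice is an assumption; any selection rule could be used here.
        return self.rng.choice(candidates)


registry = ServiceRegistry()
for address in ["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"]:
    registry.register("orders", address)  # hypothetical service and addresses

balancer = ClientSideBalancer(registry)
balancer.mark_unhealthy("10.0.0.2:8080")  # e.g. after a failed health check
target = balancer.choose("orders")
```

The client only needs the registered name (`"orders"` here); the addresses come from the registry, and the health bookkeeping stays on the client.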

Load Balancing Microservices Architecture: When to Use

Load balancing challenges occur mostly when there are changes in the resource population and when the infrastructure grows according to increased demand. In such cases, if load is not shared evenly across the nodes, then end-user experience can be impacted negatively and application performance eventually suffers.

Understanding the Load

It is vital to use monitoring tools to study how the load is balanced across the nodes of each service. Traditional monitoring tools tend to focus on per-node availability and errors. They help to check whether the nodes are working, but they do not reveal the state of the service as a whole, or how the current load on each node compares with the other nodes in the cluster. Imbalances of that kind are what ultimately degrade performance for users.

A cluster’s load balance can be studied effectively by looking at the ratio of the standard deviation of the per-node load to its mean. This is called the coefficient of variation. The ratio measures how effectively the load is spread across the nodes. A low ratio indicates minimal differences in load among the nodes, which means the load is well balanced. A high ratio indicates the opposite: significant differences in load exist among nodes, which is a cause for concern.
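The ratio can be computed in a few lines of Python. This is a sketch under two assumptions: the load is some per-node measurement such as requests per second, and the population standard deviation is used (the article does not say which variant is intended). The function name and sample numbers are made up for illustration.

```python
from statistics import mean, pstdev


def load_balance_ratio(node_loads):
    """Standard deviation of per-node load divided by its mean."""
    average = mean(node_loads)
    if average == 0:
        # No load anywhere; treat the cluster as trivially balanced.
        return 0.0
    return pstdev(node_loads) / average


# Hypothetical requests-per-second readings for a four-node cluster.
balanced = [98, 101, 100, 101]
skewed = [20, 180, 40, 160]

balanced_ratio = load_balance_ratio(balanced)
skewed_ratio = load_balance_ratio(skewed)
```

Because the ratio is normalized by the mean, it can be compared across services and across time even when absolute traffic levels differ.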


Calculating the load balancing effectiveness ratio clarifies the load behavior of a specific application. By monitoring this ratio over a period, it is possible to infer how changes in product demand affect load balancing, and to catch performance problems before they cause serious issues for active users.

Dynamic Changes

By definition, cloud-hosted infrastructure grows and shrinks according to the demands imposed on the application. Change is a constant, whether it is expected or unplanned. The load is likely to vary considerably, whether because of an unexpected component outage or a rapid surge or dip in business demand.


Tracking the load balancing effectiveness ratio over a period provides a baseline for managing a microservices environment steadily. When dealing with dynamic changes, however, a good strategy is to compare the current ratio with its average over a range of time. Such a moving average smooths out transient variations in the environment, so that they do not trigger alerts.
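One way to sketch this comparison is a fixed-size window over recent ratio values. The window size, the class and function names, and the sample readings are assumptions for illustration only.

```python
from collections import deque


class MovingAverage:
    """Simple moving average over the most recent `window` values."""

    def __init__(self, window):
        if window < 1:
            raise ValueError("window must be at least 1")
        self._values = deque(maxlen=window)  # old values fall off automatically

    def update(self, value):
        self._values.append(value)
        return sum(self._values) / len(self._values)


def relative_drift(current, baseline):
    """How far the current ratio sits from its baseline, as a fraction."""
    if baseline == 0:
        return 0.0
    return (current - baseline) / baseline


# Hypothetical ratio readings collected at regular intervals.
baseline = MovingAverage(window=3)
for ratio in [0.10, 0.12, 0.11]:
    average = baseline.update(ratio)

current = 0.22
drift = relative_drift(current, average)  # roughly double the recent average
```

A short window reacts quickly but lets more noise through; a longer one is calmer but slower to reflect a genuine shift.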

Knowing When an Issue is Going to Crop Up

Every extra minute spent resolving an issue is more than just downtime: it widens the rift in customer trust and adds to lost revenue.

Alerts based on outliers or dynamic thresholds lighten the burden of passive monitoring and notify the team of an issue that requires immediate attention. Constructing an alert that fires when the load balancing effectiveness ratio rises above a percentile-based threshold (a high ratio signals an imbalanced load) helps teams start working on a solution right away.
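A percentile-based threshold can be sketched as follows. The nearest-rank percentile method, the 95th-percentile default, and the sample history are assumptions; since a high ratio indicates imbalance, this sketch alerts when the current ratio exceeds the chosen percentile of recent history.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of `values`, with pct in the range (0, 100]."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def should_alert(history, current, pct=95):
    """True when the current ratio is above the pct-th percentile of history."""
    return current > percentile(history, pct)


# Hypothetical history of ratio readings: 0.01, 0.02, ..., 0.20.
history = [i / 100 for i in range(1, 21)]

threshold = percentile(history, 95)
calm = should_alert(history, 0.10)
imbalanced = should_alert(history, 0.25)
```

Deriving the threshold from recent history lets it move with the environment, which a fixed number cannot do.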

This is very relevant in the case of companies functioning in the retail space that want to ensure a great customer experience. These organizations have to be notified of any issues affecting the end user in advance. This is critical during the holiday shopping season when there will be an increased likelihood of demand on the infrastructure. Retail companies, therefore, must understand their load, dynamic changes that could come along, and know when an issue may crop up.


A moving average of the load balancing effectiveness ratio may help to smooth transient anomalies. In addition, alerts that compare the ratio with its value a year ago and its value a day ago can work together to give appropriate notifications of real issues. For example, the year-ago alert could show how you are performing relative to the same point in last year’s holiday shopping season. The day-ago alert could reveal an emerging pattern that requires more capacity to accommodate new demand, say, from a one-day promotion set up for the holiday season.
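The pairing of a day-ago and a year-ago comparison might look like the sketch below. The 25% tolerance, the function names, and the sample values are hypothetical; the appropriate tolerance depends on how noisy the ratio is for a given service.

```python
def relative_change(current, reference):
    """Fractional change of `current` with respect to `reference`."""
    if reference == 0:
        return 0.0
    return (current - reference) / reference


def compare_to_history(current, day_ago, year_ago, tolerance=0.25):
    """Flag each comparison whose ratio has grown by more than `tolerance`."""
    return {
        "day_ago": relative_change(current, day_ago) > tolerance,
        "year_ago": relative_change(current, year_ago) > tolerance,
    }


# Hypothetical ratio readings during a one-day holiday promotion.
flags = compare_to_history(current=0.30, day_ago=0.20, year_ago=0.28)
```

In this made-up case the ratio is close to last year's seasonal level but well above yesterday's, which points to a new, short-term imbalance rather than a seasonal one.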

Preventing an Emerging Problem

Resolving a potential outage before it affects your customers saves time and resources and protects trust and your reputation. Analytics-based alerting helps you take action before customers are negatively impacted. One useful tool is an aggregate view of all the metrics for the entire production environment in one place. Identifying and then tracking service-wide patterns lets you get notified of any significant trend and take proactive action before it snowballs into a widespread issue.

Conclusion

Load balancing microservices architecture is ultimately the way to ensure that microservices can handle the load while remaining secure and available.

How can SayOne Help

At SayOne, we offer independent and stable services that have separate development aspects as well as maintenance advantages. We build microservices suited to individual businesses in different industry verticals. In the longer term, this allows your organization to enjoy a sizeable increase in both growth and efficiency. We create microservices as APIs with security and the application built in.

We provide SDKs that allow for the automatic creation of microservices. We also design the microservices in a way so that they will be able to handle the load and security under the most severe demand conditions and still remain available to your clients.


Our comprehensive services in microservices development for start-ups, SMBs, and enterprises start with an extensive microservices feasibility analysis done to provide each of our clients with the best services. We use powerful frameworks for our custom-built microservices for different organizations. Our APIs are designed to enable fast iteration, easy deployment, and significantly less time to market. In short, our microservices are dexterous and resilient and deliver the security and reliability required for the different functions.


FAQs

Load balancers boost application performance by lowering network latency and improving response times. They carry out a variety of vital tasks, including distributing the load evenly among servers to boost application performance and redirecting client requests to a geographically nearby server to minimize latency.

The load balancer assists servers in effectively moving data, optimizing the usage of application delivery resources, and preventing server overloads. Load balancers continuously monitor the health of servers to ensure they can handle requests.
